What are Layer 2s?

Today, Layer 2s (L2s) are touted as one of the holy grails of scaling, yet many people do not realize that L2s are in fact separate blockchains that compress data to fit more transactions into each L1 block. To maximize the impact of an L2, up to 100% of the L1 block space can be devoted to it.

However, the fundamental bottleneck remains the amount of data that can be propagated at the L1. The same compression and transaction batching used by L2s can be applied to a chain far more performant than Ethereum, yielding the same percentage gain on much bigger blocks. As such, a conversation about the potential of L2s is also a conversation about L1 design.

L1s and L2s share the same components (they are both blockchains), meaning we will need to optimize for the correct features to achieve the desired properties and performance of each. To gain an understanding of L2s and their impact, we will examine some of the tradeoffs they involve and how these impact user and developer experience, critical items on the path to mass adoption.

Imagine once every second a moving truck pulls up in front of your house and pauses for a second, then leaves. Another truck then takes its place and the cycle repeats nonstop. You can think of each truck as a block that is filled with data before being submitted to all nodes for approval. Next generation blockchain designers have the interesting challenge of writing software to pack blocks with data in a second or less. But what happens when the blocks start to get full? Enter the Layer 2s (L2s).

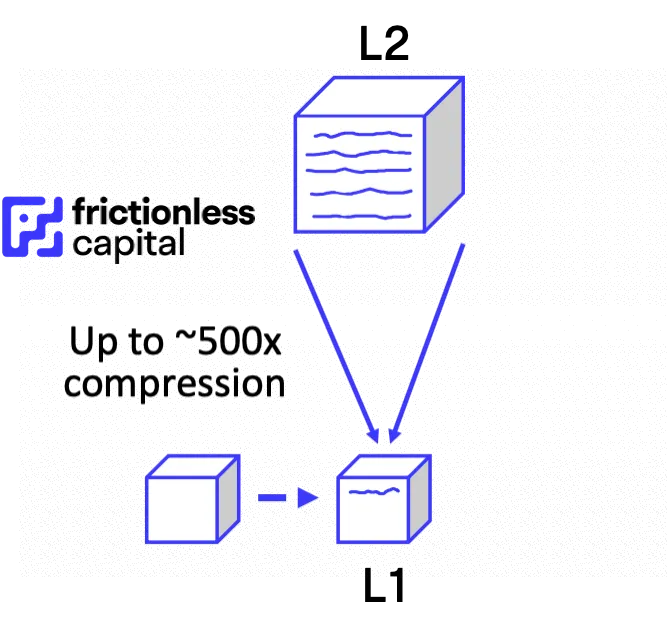

L2s are separate blockchains that extend the base layer and inherit its security guarantees. They batch many transactions into one before submitting it down to the L1, so scarce L1 block space is used more efficiently: less data is posted per transaction and gas fees fall significantly.
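To make the batching idea concrete, here is a toy sketch. It is not how any production rollup encodes data: the transaction fields, the JSON encoding, and the use of `zlib` are all assumptions chosen only to show that grouping similar transactions and compressing them once posts fewer bytes than encoding each one separately.

```python
import json
import zlib


def make_transfer(i: int) -> dict:
    """Build a hypothetical transfer; the field names are illustrative only."""
    return {"from": f"user{i % 10}", "to": "merchant", "amount": 5, "nonce": i}


def bytes_if_posted_individually(txs: list) -> int:
    """Bytes posted to the L1 if every transaction is encoded on its own."""
    return sum(len(json.dumps(tx).encode()) for tx in txs)


def bytes_if_posted_as_batch(txs: list) -> int:
    """Bytes posted to the L1 if the transactions are grouped and compressed once."""
    return len(zlib.compress(json.dumps(txs).encode()))


txs = [make_transfer(i) for i in range(1000)]
individual = bytes_if_posted_individually(txs)
batched = bytes_if_posted_as_batch(txs)
print(f"individual: {individual} bytes, batched: {batched} bytes")
print(f"approximate ratio: {individual / batched:.1f}x")
```

The ratio this prints depends entirely on how repetitive the toy data is, so it should not be read as a real-world compression figure.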

We stress that L2s are, in fact, separate blockchains because, as they continue to decentralize by adding multiple sequencers, quorum and consensus must be established between those sequencers.

Because of this quorum, L2s have their own execution environment, data propagation, consensus algorithm, and potentially storage, like any other blockchain.

The simplest way to explain the role of L2s (aka “rollups”) is that they act as data compression as illustrated in the diagram below:

It is important to note that while L2s allow you to fit more data into a block, they do not enable limitless block space. Phrased differently, if an L2 compresses data and 100% of L1 block space is used for the L2, the overall block space is used more efficiently, but the raw amount of data at the L1 is unchanged. This concept is illustrated below with two block sizes, each compressed by 500x:

Blockchain A: 1.3MB block * 500 becomes 650MB effectively

Blockchain B: 100MB block * 500 becomes 50,000MB or 50GB effectively

Blockchains A and B are still propagating 1.3MB and 100MB blocks respectively at the base layer.
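The arithmetic above can be written as a small sketch. The function name `effective_capacity_mb` is hypothetical, and the 500x ratio is simply the figure used in this article, not a measured value.

```python
def effective_capacity_mb(block_size_mb: float, compression_ratio: float) -> float:
    """Effective data per block once L2 compression is applied.

    The L1 still propagates only block_size_mb of raw data per block.
    """
    return block_size_mb * compression_ratio


COMPRESSION_RATIO = 500  # the article's illustrative figure

for name, raw_mb in [("Blockchain A", 1.3), ("Blockchain B", 100.0)]:
    effective = effective_capacity_mb(raw_mb, COMPRESSION_RATIO)
    print(f"{name}: {raw_mb} MB propagated, about {effective:,.0f} MB effective")
```

The multiplier is the same for both chains, so the chain that propagates the larger raw block keeps its lead after compression.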

This concept is quite powerful. The technique can even be replicated in another layer above the L2: an L3 adds a further layer of data compression that settles down to the L2, which in turn settles down to the L1. However, this would only amplify some of the negative aspects of L2s, which we will address in this piece.

What role do L2s play in mass adoption?

If L2s are such an innovative technology and they increase the performance of the underlying L1, why not just add a separate L2 to every chain and call it a day? In reality, few tradeoffs are without cost, and L2s are no exception. This article will dissect some of the nuances behind L2s in the context of user and developer experience, which are two key items on the path to mass adoption.

To onboard millions or billions of users who are accustomed to a seamless Web2 experience, the pain points of Web3 need to be abstracted away. Done well, this abstraction creates a smooth and self-explanatory layer built for the lowest common denominator of users. For all their merits, L2s introduce friction and fragment the user experience at a time when we should be smoothing resistance points, not creating them.

What are some of the drawbacks of L2s from the user and developer perspective?

- Bridging is cumbersome and risky

- “Finality” is only final on the base layer

- High latency equates to poor design & UX

- Layers create a fragmented experience

- Loss of composability hurts compounding of innovation

In order to transact on the L2, one must first bridge funds from another blockchain to the Layer 2. Bridging is currently one of the most at-risk activities in all of crypto, with Chainalysis reporting that an estimated $2B was lost in cross-chain bridge exploits in 2022 alone. Anyone who has used a bridge can agree it is a cumbersome and painful process, which usually involves minutes of waiting for funds to arrive, crossing your fingers that you don’t lose your money, and adding a token account so that the funds (net of hefty fees!) appear in a non-custodial wallet such as MetaMask.

If this wasn’t enough, additional steps to unwrap tokens are often needed, using a DEX (decentralized exchange) or a wallet’s swap feature, to finally obtain the desired coin or token. In short, this multi-step process leaves much to be desired and is complicated to explain even to a crypto-native Web3 user, let alone someone who has no idea what confirmations or a block explorer are…

From the developer side, imagine the complexity of writing a contract that initiates a transaction on the L1, transfers assets to the L2, carries out an operation there, then settles back down to the L1. This added complexity for developers and users should be reserved for situations where no other alternative exists. It should be a last resort, not the standard offering for the vast majority of transactions, as some may suggest.

Moreover, a transaction cannot be considered completely final until it settles down to the L1 and is confirmed. Ethereum produces a block every ~12 seconds, meaning an L2 transaction could take 12s or more to be finalized. For many users, namely those whose actions are not critical or time sensitive, this may be an acceptable time to finality (TTF).
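As a rough illustration of where the "12s or more" comes from, the sketch below models only the wait for the next L1 block. It is a deliberately simplified model, not a description of any specific rollup: the function name and the `extra_l1_blocks` parameter are hypothetical, and sequencer batching delays, proof or challenge windows, and L1 confirmation depth are ignored unless folded into `extra_l1_blocks`.

```python
def seconds_until_l1_settlement(
    submitted_at: float,
    l1_block_interval: float = 12.0,
    extra_l1_blocks: int = 0,
) -> float:
    """Seconds an L2 transaction waits before its batch lands in an L1 block.

    Assumes L1 blocks arrive at exact multiples of l1_block_interval and that
    the batch is posted in the very next L1 block. extra_l1_blocks is a
    hypothetical knob for additional L1 blocks the application waits for.
    """
    wait_for_next_block = l1_block_interval - (submitted_at % l1_block_interval)
    return wait_for_next_block + extra_l1_blocks * l1_block_interval


# A transaction submitted just after an L1 block waits nearly the full interval.
print(seconds_until_l1_settlement(0.0))
# A transaction submitted 5 seconds into the interval waits for the remainder.
print(seconds_until_l1_settlement(5.0))
# Waiting for two further L1 blocks adds two more intervals.
print(seconds_until_l1_settlement(5.0, extra_l1_blocks=2))
```

Under these assumptions the wait is bounded by one block interval plus whatever extra blocks the application requires, which is why a shorter L1 block time directly shortens the delay an L2 user feels.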

Imagine a leverage trader who is seconds away from liquidation and unable to close their position in time. This could be a game-breaking factor in their decision to opt for one chain over another. Once again, the scope of what product and application engineers can design is handicapped by slow finality. It is undoubtedly one of the aspects of user experience that people feel most acutely.

Another effect of using an L2 is high latency, which is directly associated with poor user experience. Low network latency is paramount to mass adoption. How do we expect to compete with mainstream massively multiplayer games and virtual experiences when every action has a felt delay or “lag” before completion? Faster and more synchronized experiences are better in virtually every situation imaginable.

Building good tech is hard, but building a durable social layer can be an even greater challenge. What does fragmenting user activity across various L2s mean for the organic community building required to create a healthy ecosystem? What if, instead of building bridges between dApps, NFT projects, and their communities, L2s are building walls? This effect shouldn’t be ignored.

For developers, L2s, as completely separate blockchains, have upstream dependencies on the L1. This means any change in the underlying L1 has to be reflected in the L2. Imagine constantly having to debug and check your code because some minor update was pushed on the L1. It grows tedious and tiresome, and the possibility for careless mistakes is almost endless.

Occam’s Razor dictates that the simplest solution with the fewest number of moving parts is usually the best, and blockchains are no exception.

Furthermore, what does loss of composability between L1 and L2 mean for developers, who are unable to natively use existing resources as building blocks and program them into higher order applications? Loss of composability between L1 and L2 is hugely detrimental for developer experience and this can have massive second-order effects on the long-term compounding of innovation in an ecosystem.

L2s help commoditize block space but at a steep cost

At a high level, it is clear L2 technology will be a mainstay in blockchain, but we challenge the notion that widespread L2 adoption leads us down the path of mass adoption at this point in time. Simply put, any blockchain, such as Ethereum, that relies heavily on an L2 solution so early in its life cycle was not built to scale.

Top ETH dApps such as Uniswap are only seeing a few hundred thousand monthly active users. This is truly a drop in the ocean when compared to mainstream Web2 applications like TikTok or YouTube, with over 1 billion monthly active users.

Basing your roadmap on L2s and other scaling solutions so early on essentially amounts to a Faustian Bargain, sacrificing user experience for performance and affordability. What is gained in some areas is certainly lost in others, and user experience does not consist of just one metric, but rather the general impression the average user gets from interacting with the chain and its applications.

So when do L2s become a necessary component to support next generation blockchains? The answer is pretty clear: when block space goes from being a commodity to a luxury, and when product and application innovation has been pushed to the limits of what is possible on the L1.

Optimizing for the correct things is key, with or without L2s

Blockchain architects who solve for decentralization over performance have created a situation where L2s, with all of their drawbacks, are one of the only avenues left for them to retrofit their legacy technology with much-needed performance upgrades. The ideology that continues to opt for decentralization past the point of massively diminishing returns, at the expense of performance, is also likely to carry this bias to the L2 level, solving for multiple sequencers and continuing even further.

Why have just 2 sequencers when you can establish consensus between 30 or 50 or 100? In the quest for decentralization above all, key tenets of performance and user experience have been thrown out. Every blockchain makes its respective tradeoffs, but few will scale to support mass adoption. Thus, next generation blockchains offer the best chance we have today.

Next generation blockchains have all of the benefits of L2 data compression available to them, but can continually compound innovation with native composability on the L1 until the day an L2 solution becomes necessary. Since we have established that L2s are separate blockchains, the benefits of pushing L1s to their physical limit also accrue to L2 design. It is likely that the most performant L2s will share many features, such as the execution environment, with highly performant L1s.

What’s next? We keep building, keep innovating, keep pushing L1 and L2 tech forward, and onboarding as many users as we can. The most successful blockchains will have an appreciation for the subtleties of what L2s are and aren’t, where they can be useful, and where they do more harm than good.

L2s are just another tool on the path to mass adoption. On that journey we cannot rely too heavily on any one tool, and we must stay objective about our biases towards legacy technologies, which may be the paragon of their generation but are not ultimately the future of blockchain tech.

We expect the performance of next generation L1s to far outpace that of legacy blockchains like Ethereum 2.0, even with full L2 utilization. Until next generation blockchains require an L2 solution, users and developers will enjoy relatively costless performance.